Ovis2

This model was released on 2024-05-31 and added to Hugging Face Transformers on 2025-08-18.

Overview

Ovis2 is an updated version of the Ovis model developed by the AIDC-AI team at Alibaba International Digital Commerce Group.

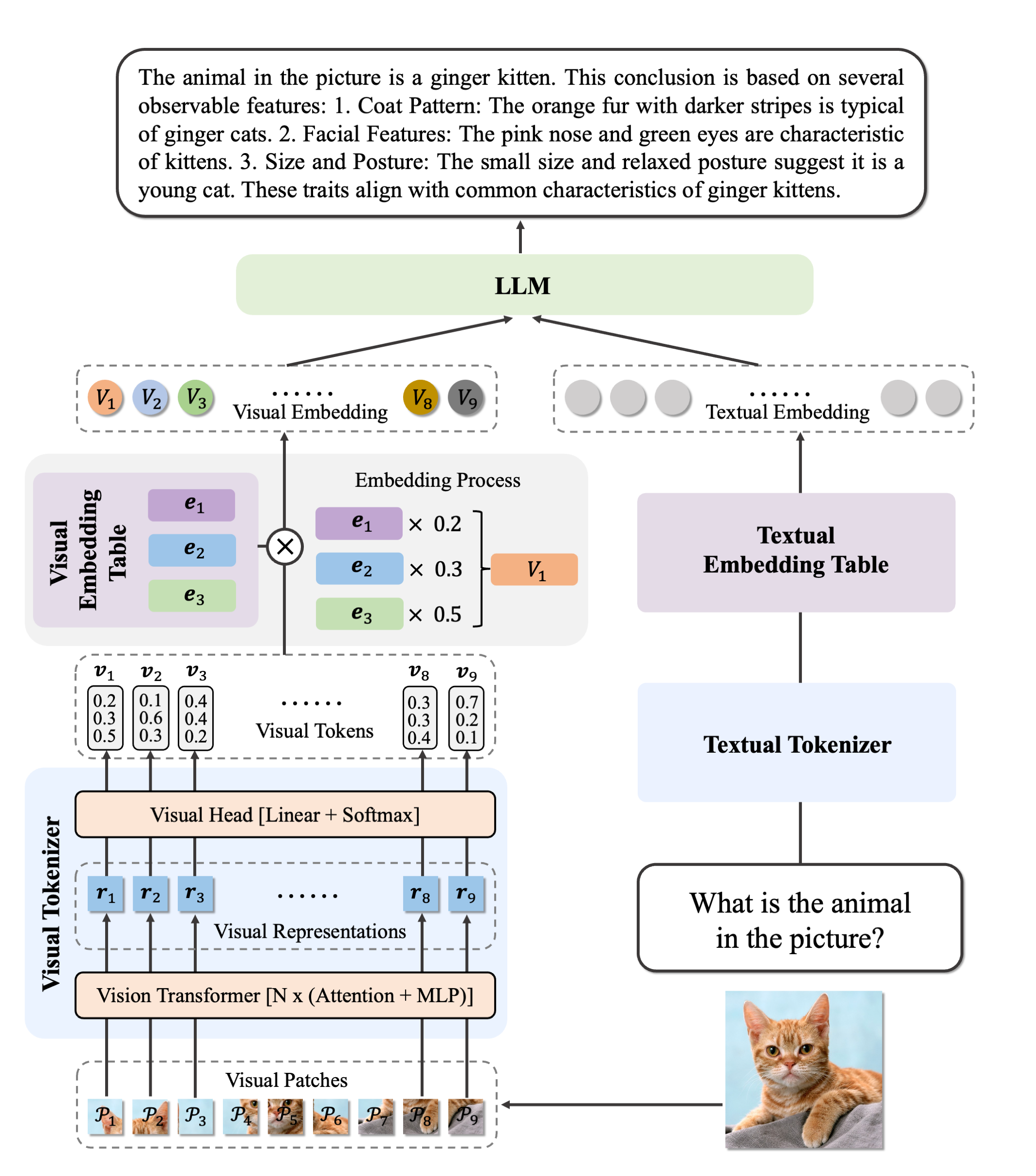

Ovis2 is the latest advancement in multi-modal large language models (MLLMs), succeeding Ovis1.6. It retains the architectural design of the Ovis series, which focuses on aligning visual and textual embeddings, and introduces major improvements in data curation and training methods.

Ovis2 architecture.
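The Ovis paper describes the embedding alignment roughly as follows. The visual tokenizer maps each image patch to a probability distribution over a learnable visual vocabulary. The patch's embedding is then the probability-weighted sum of rows of a visual embedding table, mirroring how a text token indexes the textual embedding table. The sketch below illustrates that idea in plain Python under those assumptions. The helper names (`softmax`, `probabilistic_visual_embedding`), the toy vocabulary size, and the embedding dimension are hypothetical. It is not the Transformers implementation of Ovis2.

```python
import math
from typing import List


def softmax(logits: List[float]) -> List[float]:
    # Subtract the max logit for numerical stability before exponentiating.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def probabilistic_visual_embedding(
    patch_logits: List[float],
    visual_embedding_table: List[List[float]],
) -> List[float]:
    """Hypothetical helper: embed one image patch as the probability-weighted
    sum of the rows of a visual embedding table (one row per visual word)."""
    probs = softmax(patch_logits)  # distribution over the visual vocabulary
    dim = len(visual_embedding_table[0])
    embedding = [0.0] * dim
    for p, row in zip(probs, visual_embedding_table):
        for d in range(dim):
            embedding[d] += p * row[d]
    return embedding


# Toy setup (assumed values): a visual vocabulary of 3 words, embedding dim 2.
table = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Uniform logits give equal weight to every row of the table.
print(probabilistic_visual_embedding([0.0, 0.0, 0.0], table))
```

The weights sum to one, so the result always lies in the convex hull of the table's rows. A sharply peaked distribution reduces to an ordinary embedding lookup, just like a discrete text token.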

This model was contributed by thisisiron.

Usage example

```python
import requests
import torch
from PIL import Image
from accelerate import Accelerator
from transformers import AutoModelForImageTextToText, AutoProcessor

device = Accelerator().device

model = AutoModelForImageTextToText.from_pretrained(
    "thisisiron/Ovis2-2B-hf",
    dtype=torch.bfloat16,
).eval().to(device)
processor = AutoProcessor.from_pretrained("thisisiron/Ovis2-2B-hf")

messages = [
    {
        "role": "user",
        "content": [
            {"type": "image"},
            {"type": "text", "text": "Describe the image."},
        ],
    },
]
url = "http://images.cocodataset.org/val2014/COCO_val2014_000000537955.jpg"
image = Image.open(requests.get(url, stream=True).raw)

# Render the conversation into a prompt string containing the image placeholder
prompt = processor.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
print(prompt)

inputs = processor(
    images=[image],
    text=prompt,
    return_tensors="pt",
)
inputs = inputs.to(model.device)
# Cast the pixel values to match the model's bfloat16 weights
inputs["pixel_values"] = inputs["pixel_values"].to(torch.bfloat16)

with torch.inference_mode():
    output_ids = model.generate(**inputs, max_new_tokens=128, do_sample=False)
    # The output starts with the prompt tokens; keep only the newly generated ones
    generated_ids = [
        out_ids[len(in_ids):] for in_ids, out_ids in zip(inputs.input_ids, output_ids)
    ]
    output_text = processor.batch_decode(generated_ids, skip_special_tokens=True)
    print(output_text)
```

Ovis2Config

[[autodoc]] Ovis2Config

Ovis2VisionConfig

[[autodoc]] Ovis2VisionConfig

Ovis2Model

[[autodoc]] Ovis2Model

Ovis2ForConditionalGeneration

[[autodoc]] Ovis2ForConditionalGeneration
    - forward

Ovis2ImageProcessor

[[autodoc]] Ovis2ImageProcessor

Ovis2ImageProcessorFast

[[autodoc]] Ovis2ImageProcessorFast

Ovis2Processor

[[autodoc]] Ovis2Processor