NLLB

This model was released on 2022-07-11 and added to Hugging Face Transformers on 2022-07-18.

Overview

Section titled “Overview”NLLB: No Language Left Behind is a multilingual translation model. It’s trained on data using data mining techniques tailored for low-resource languages and supports over 200 languages. NLLB features a conditional compute architecture using a Sparsely Gated Mixture of Experts.

You can find all the original NLLB checkpoints under the AI at Meta organization.

The example below demonstrates how to translate text with Pipeline or the AutoModel class.

import torchfrom transformers import pipeline

pipeline = pipeline(task="translation", model="facebook/nllb-200-distilled-600M", src_lang="eng_Latn", tgt_lang="fra_Latn", dtype=torch.float16, device=0)pipeline("UN Chief says there is no military solution in Syria")from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("facebook/nllb-200-distilled-600M")model = AutoModelForSeq2SeqLM.from_pretrained("facebook/nllb-200-distilled-600M", dtype="auto", attn_implementation="sdpa")

article = "UN Chief says there is no military solution in Syria"inputs = tokenizer(article, return_tensors="pt")

translated_tokens = model.generate( **inputs, forced_bos_token_id=tokenizer.convert_tokens_to_ids("fra_Latn"), max_length=30)print(tokenizer.batch_decode(translated_tokens, skip_special_tokens=True)[0])echo -e "UN Chief says there is no military solution in Syria" | transformers run --task "translation_en_to_fr" --model facebook/nllb-200-distilled-600M --device 0Quantization reduces the memory burden of large models by representing the weights in a lower precision. Refer to the Quantization overview for more available quantization backends.

The example below uses bitsandbytes to quantize the weights to 8-bits.

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer, BitsAndBytesConfig

bnb_config = BitsAndBytesConfig(load_in_8bit=True)model = AutoModelForSeq2SeqLM.from_pretrained("facebook/nllb-200-distilled-1.3B", quantization_config=bnb_config)tokenizer = AutoTokenizer.from_pretrained("facebook/nllb-200-distilled-1.3B")

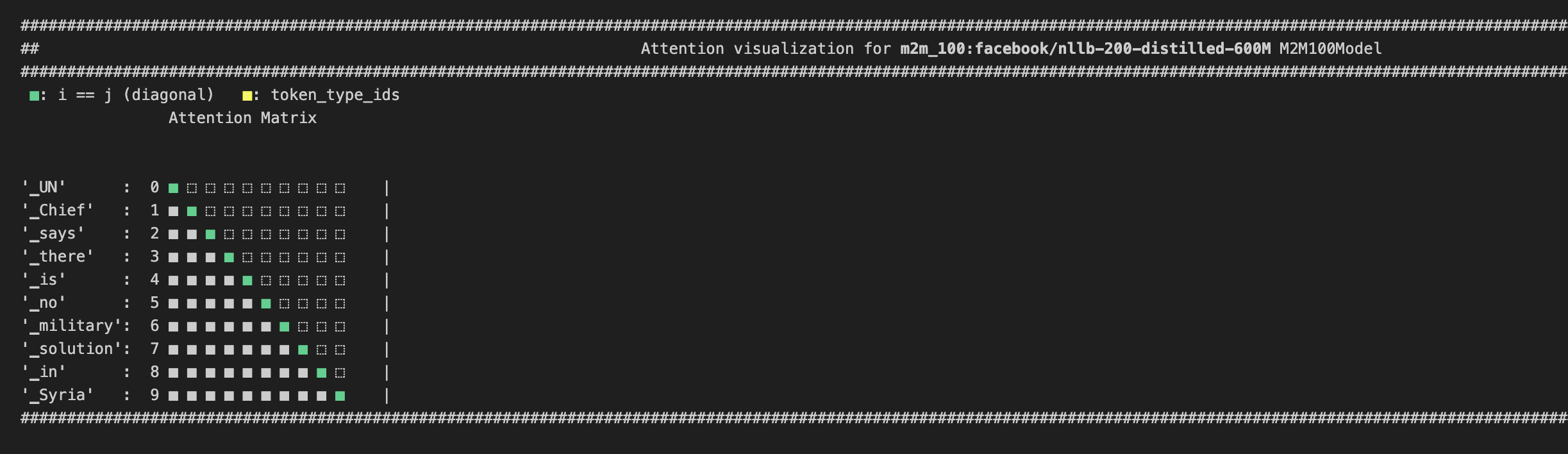

article = "UN Chief says there is no military solution in Syria"inputs = tokenizer(article, return_tensors="pt").to(model.device)translated_tokens = model.generate( **inputs, forced_bos_token_id=tokenizer.convert_tokens_to_ids("fra_Latn"), max_length=30,)print(tokenizer.batch_decode(translated_tokens, skip_special_tokens=True)[0])Use the AttentionMaskVisualizer to better understand what tokens the model can and cannot attend to.

from transformers.utils.attention_visualizer import AttentionMaskVisualizer

visualizer = AttentionMaskVisualizer("facebook/nllb-200-distilled-600M")visualizer("UN Chief says there is no military solution in Syria")

-

The tokenizer was updated in April 2023 to prefix the source sequence with the source language rather than the target language. This prioritizes zero-shot performance at a minor cost to supervised performance.

>>> from transformers import NllbTokenizer>>> tokenizer = NllbTokenizer.from_pretrained("facebook/nllb-200-distilled-600M")>>> tokenizer("How was your day?").input_ids[256047, 13374, 1398, 4260, 4039, 248130, 2]To revert to the legacy behavior, use the code example below.

>>> from transformers import NllbTokenizer>>> tokenizer = NllbTokenizer.from_pretrained("facebook/nllb-200-distilled-600M", legacy_behaviour=True) -

For non-English languages, specify the language’s BCP-47 code with the

src_langkeyword as shown below. -

See example below for a translation from Romanian to German.

>>> from transformers import AutoModelForSeq2SeqLM, AutoTokenizer>>> tokenizer = AutoTokenizer.from_pretrained("facebook/nllb-200-distilled-600M")>>> model = AutoModelForSeq2SeqLM.from_pretrained("facebook/nllb-200-distilled-600M")>>> article = "UN Chief says there is no military solution in Syria">>> inputs = tokenizer(article, return_tensors="pt")>>> translated_tokens = model.generate(... **inputs, forced_bos_token_id=tokenizer.convert_tokens_to_ids("fra_Latn"), max_length=30... )>>> tokenizer.batch_decode(translated_tokens, skip_special_tokens=True)[0]Le chef de l'ONU dit qu'il n'y a pas de solution militaire en Syrie

NllbTokenizer

Section titled “NllbTokenizer”[[autodoc]] NllbTokenizer

NllbTokenizerFast

Section titled “NllbTokenizerFast”[[autodoc]] NllbTokenizerFast