MarianMT

This model was released on 2018-04-01 and added to Hugging Face Transformers on 2020-11-16.


MarianMT is a machine translation model trained with the Marian framework, which is written in pure C++. The framework includes its own custom auto-differentiation engine and efficient meta-algorithms to train encoder-decoder models like BART.

All MarianMT models are transformer encoder-decoders with 6 layers in each component. They use static sinusoidal positional embeddings, don’t have a layernorm embedding, and start generating with `pad_token_id` as the decoder prefix instead of `<s>`.
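Here is a minimal pure-Python sketch of what "static sinusoidal positional embeddings" means. It assumes a base of 10000 and a `[sin | cos]` half-split layout (sines in the first half of each vector, cosines in the second, sharing the same frequencies). That layout is an assumption based on the Transformers Marian implementation, not something stated on this page. The function name `sinusoidal_table` is hypothetical.

```python
import math
from typing import List


def sinusoidal_table(num_positions: int, dim: int) -> List[List[float]]:
    """Return a fixed (non-learned) sinusoidal position table.

    Assumed layout: the first dim // 2 entries of each row are sines and the
    last dim // 2 are cosines, sharing the frequencies 1 / 10000 ** (2i / dim).
    """
    if dim % 2 != 0:
        raise ValueError("this sketch assumes an even embedding size")
    half = dim // 2
    table = []
    for pos in range(num_positions):
        # One angle per frequency, shared by the sine half and the cosine half.
        angles = [pos / 10000 ** (2 * i / dim) for i in range(half)]
        table.append([math.sin(a) for a in angles] + [math.cos(a) for a in angles])
    return table


table = sinusoidal_table(num_positions=4, dim=8)
print(table[0])  # [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
```

Nothing in the table is trained, so the same position always maps to the same vector. In the model, these vectors are added to the token embeddings, so position information comes from a formula rather than from learned weights.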

You can find all the original MarianMT checkpoints under the Helsinki-NLP organization of the Language Technology Research Group at the University of Helsinki.
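Checkpoint IDs follow the pattern `Helsinki-NLP/opus-mt-{src}-{tgt}`, regional codes look like `es_AR`, and multilingual checkpoints select their output language from a `>>code<<` token at the start of each source sentence. The sketch below assumes those conventions. It uses hypothetical helper names (`opus_mt_checkpoint`, `split_region`, `add_target_token`) that are not part of Transformers. The helpers only build strings and do not check whether a checkpoint actually exists on the Hub.

```python
from typing import List, Optional, Tuple

# Hypothetical helpers (not part of Transformers). They only build the strings
# MarianMT checkpoints expect and never query the Hugging Face Hub.


def opus_mt_checkpoint(src: str, tgt: str) -> str:
    """Build a checkpoint ID following Helsinki-NLP/opus-mt-{src}-{tgt}."""
    return f"Helsinki-NLP/opus-mt-{src}-{tgt}"


def split_region(code: str) -> Tuple[str, Optional[str]]:
    """Split a code like 'es_AR' into ('es', 'AR'); plain codes get region None."""
    language, sep, region = code.partition("_")
    return (language, region) if sep else (language, None)


def add_target_token(texts: List[str], lang: str) -> List[str]:
    """Prepend a >>lang<< target-language token to every source sentence."""
    return [f">>{lang}<< {text}" for text in texts]


print(opus_mt_checkpoint("en", "de"))  # Helsinki-NLP/opus-mt-en-de
print(split_region("es_AR"))  # ('es', 'AR')
print(add_target_token(["Hello, how are you today?"], "arb"))  # ['>>arb<< Hello, how are you today?']
```

The token helper works for both the 3-letter codes used by newer Tatoeba-Challenge models (`"arb"`) and the 2-letter codes used by older multilingual models (`"fr"`).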

Click on the MarianMT models in the right sidebar for more examples of how to apply MarianMT to translation tasks.

The example below demonstrates how to translate text using Pipeline or the AutoModel class.

import torchfrom transformers import pipeline

pipeline = pipeline("translation_en_to_de", model="Helsinki-NLP/opus-mt-en-de", dtype=torch.float16, device=0)pipeline("Hello, how are you?")import torchfrom transformers import AutoModelForSeq2SeqLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("Helsinki-NLP/opus-mt-en-de")model = AutoModelForSeq2SeqLM.from_pretrained("Helsinki-NLP/opus-mt-en-de", dtype=torch.float16, attn_implementation="sdpa", device_map="auto")

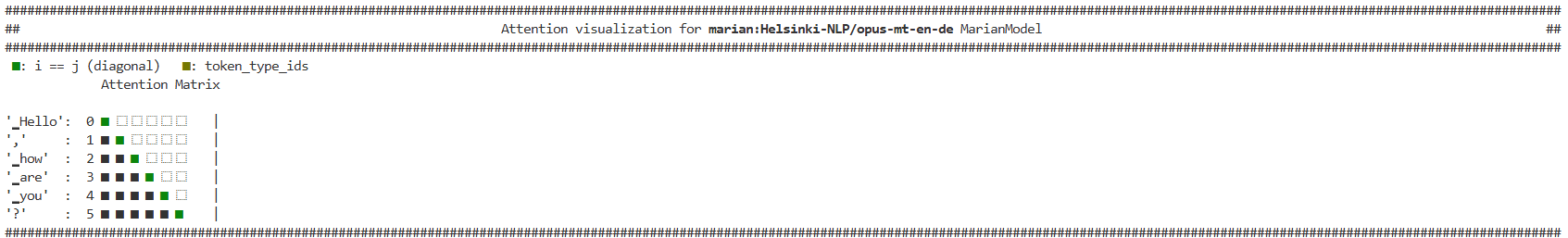

inputs = tokenizer("Hello, how are you?", return_tensors="pt").to(model.device)outputs = model.generate(**inputs, cache_implementation="static")print(tokenizer.decode(outputs[0], skip_special_tokens=True))Use the AttentionMaskVisualizer to better understand what tokens the model can and cannot attend to.

```python
from transformers.utils.attention_visualizer import AttentionMaskVisualizer

visualizer = AttentionMaskVisualizer("Helsinki-NLP/opus-mt-en-de")
visualizer("Hello, how are you?")
```

- MarianMT models are ~298MB on disk, and there are more than 1000 of them. Check this list for supported language pairs. Language codes may be inconsistent: two-letter codes can be found here, while three-letter codes may require further searching.

- Models that require BPE preprocessing are not supported.

- All model names use the format `Helsinki-NLP/opus-mt-{src}-{tgt}`. Language codes formatted like `es_AR` usually refer to `code_{region}`. For example, `es_AR` refers to Spanish from Argentina.

- If a model can output multiple languages, prepend the desired output language token to `src_texts` as shown below. New multilingual models from the Tatoeba-Challenge require 3-character language codes.

```python
from transformers import MarianMTModel, MarianTokenizer

# Model trained on multiple source languages → multiple target languages
# Example: multilingual to Arabic (arb)
model_name = "Helsinki-NLP/opus-mt-mul-mul"  # Tatoeba Challenge model
tokenizer = MarianTokenizer.from_pretrained(model_name)
model = MarianMTModel.from_pretrained(model_name)

# Prepend the desired output language token, >>code<< (3-letter ISO 639-3 code)
src_texts = [">>arb<< Hello, how are you today?"]

# Tokenize and translate
inputs = tokenizer(src_texts, return_tensors="pt", padding=True, truncation=True)
translated = model.generate(**inputs)

# Decode and print result
translated_texts = tokenizer.batch_decode(translated, skip_special_tokens=True)
print(translated_texts[0])
```

- Older multilingual models use 2-character language codes.

```python
from transformers import MarianMTModel, MarianTokenizer

# Example: older multilingual model (like en → many)
model_name = "Helsinki-NLP/opus-mt-en-ROMANCE"  # English → French, Spanish, Italian, etc.
tokenizer = MarianTokenizer.from_pretrained(model_name)
model = MarianMTModel.from_pretrained(model_name)

# Prepend the 2-letter ISO 639-1 target language code (older format)
src_texts = [">>fr<< Hello, how are you today?"]

# Tokenize and translate
inputs = tokenizer(src_texts, return_tensors="pt", padding=True, truncation=True)
translated = model.generate(**inputs)

# Decode and print result
translated_texts = tokenizer.batch_decode(translated, skip_special_tokens=True)
print(translated_texts[0])
```

MarianConfig

[[autodoc]] MarianConfig

MarianTokenizer

[[autodoc]] MarianTokenizer
    - build_inputs_with_special_tokens

MarianModel

[[autodoc]] MarianModel
    - forward

MarianMTModel

[[autodoc]] MarianMTModel
    - forward

MarianForCausalLM

[[autodoc]] MarianForCausalLM
    - forward