GPT-NeoX-Japanese

</div>This model was released on 2022-07-27 and added to Hugging Face Transformers on 2022-09-14.

GPT-NeoX-Japanese

Section titled “GPT-NeoX-Japanese”GPT-NeoX-Japanese, a Japanese language model based on GPT-NeoX. Japanese uses three types of characters (hiragana, katakana, kanji) and has a huge vocabulary. This model uses BPEEncoder V2, a sub-word tokenizer to handle the different characters.

The model also removes some bias parameters for better performance.

You can find all the original GPT-NeoX-Japanese checkpoints under the ABEJA organization.

Click on the GPT-NeoX-Japanese models in the right sidebar for more examples of how to apply GPT-NeoX-Japanese to different language tasks.

The example below demonstrates how to generate text with Pipeline or the AutoModel, and from the command line.

import torchfrom transformers import pipelinepipeline = pipeline(task="text-generation", model="abeja/gpt-neox-japanese-2.7b", dtype=torch.float16, device=0)pipeline("人とAIが協調するためには、")import torchfrom transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("abeja/gpt-neox-japanese-2.7b", dtype=torch.float16, device_map="auto")tokenizer = AutoTokenizer.from_pretrained("abeja/gpt-neox-japanese-2.7b")input_ids = tokenizer("人とAIが協調するためには、", return_tensors="pt").input_ids.to(model.device)outputs = model.generate(input_ids)print(tokenizer.decode(outputs[0], skip_special_tokens=True))Quantization reduces the memory burden of large models by representing the weights in a lower precision. Refer to the Quantization overview for more available quantization backends.

The example below uses bitsandbytes to only quantize the weights to 4-bits.

import torchfrom transformers import AutoModelForCausalLM, AutoTokenizer, BitsAndBytesConfig

quantization_config = BitsAndBytesConfig( load_in_4bit=True, bnb_4bit_use_double_quant=True, bnb_4bit_quant_type="nf4", bnb_4bit_compute_dtype="float16")model = AutoModelForCausalLM.from_pretrained( "abeja/gpt-neox-japanese-2.7b", quantization_config=quantization_config, device_map="auto")

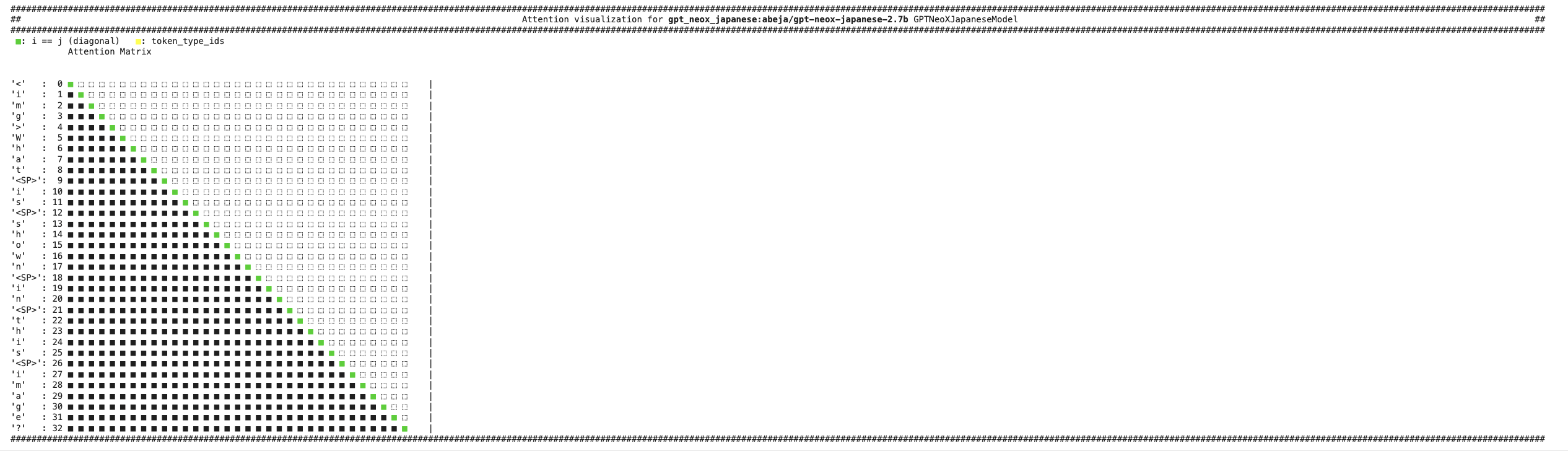

tokenizer = AutoTokenizer.from_pretrained("abeja/gpt-neox-japanese-2.7b")input_ids = tokenizer.encode("人とAIが協調するためには、", return_tensors="pt").to(model.device)output = model.generate(input_ids)print(tokenizer.decode(output[0], skip_special_tokens=True))Use the AttentionMaskVisualizer to better understand what tokens the model can and cannot attend to.

from transformers.utils.attention_visualizer import AttentionMaskVisualizer

visualizer = AttentionMaskVisualizer("abeja/gpt-neox-japanese-2.7b")visualizer("<img>What is shown in this image?")

Resources

Section titled “Resources”Refer to the Training a better GPT model: Learnings from PaLM blog post for more details about how ABEJA trained GPT-NeoX-Japanese.

GPTNeoXJapaneseConfig

Section titled “GPTNeoXJapaneseConfig”[[autodoc]] GPTNeoXJapaneseConfig

GPTNeoXJapaneseTokenizer

Section titled “GPTNeoXJapaneseTokenizer”[[autodoc]] GPTNeoXJapaneseTokenizer

GPTNeoXJapaneseModel

Section titled “GPTNeoXJapaneseModel”[[autodoc]] GPTNeoXJapaneseModel - forward

GPTNeoXJapaneseForCausalLM

Section titled “GPTNeoXJapaneseForCausalLM”[[autodoc]] GPTNeoXJapaneseForCausalLM - forward